Zingg, Data Meshes, Dagster; ThDPTh #53

Thanks to Prukalpa Sankar, co-founder of Atlan, I stumbled upon an article about data meshes that fits very well with what I’m currently working on: a book I am co-authoring (ping! Data Mesh in Action is in early access).

I’m Sven, I collect “Data Points” to help understand & shape the future, one powered by data.

Sven’s Thoughts

If you only have 30 seconds to spare, here is what I would consider actionable insights for investors, data leaders, and data company founders.

- Nobody cares about data pipelines. Dagster, the data orchestrator, puts the real thing into focus: the data asset. It thereby moves away from the idea that the “data pipeline” itself has any kind of value. This is a very welcome move, because almost all tools in this space, like dbt, Prefect, and many others, still focus on the “transformation” or the “pipeline”.

- You might not be ready for the data mesh. I do think that every company will at some point in time need to switch to a data mesh. But I also share the opinion that you might simply not have a good case right now for it, depending on your company context.

- Start-ups have a unique chance to start a data mesh from day 0. By being data-decentralized from the very beginning, they can start off right and become more data-driven than the incumbents in their markets.

- Zingg and entity resolution become interesting. Zingg is a tool that takes the load off my shoulders if I want to match duplicates in my data sets. Even better, in the future Zingg might get better at its job the more customers the company has.

10 Reasons You’re Not Ready for a Data Mesh

What: Thinh Ha wrote a very interesting piece on data meshes. He focuses on where the data mesh might not be a good fit. (I’ve also written about 3 questions to ask before building a data mesh, and 3 reasons why the data mesh sucks). His arguments focus a lot on decentralization and its costs: a data mesh doesn’t make sense if you don’t have the scale, the data maturity, or a business case for it.

My perspective: I like most of it. I particularly like the headline “not ready”, because it implies what I currently consider to be a good perspective: you will inevitably be driven towards a data mesh, just like almost every company will be driven to a microservice-like architecture. But possibly the timing is just not right yet. Like all decentralization moves, there is a trade-off between complexity & flexibility involved, and the costs really matter.

The sensible default option is always to centralize everything, and then slowly break stuff out, be it parts of the frontend for micro frontends, domains and apps for microservices, or data products for a data mesh.

But I disagree on two points, both related to size. I believe two things:

1. Start-ups actually have a unique chance to build data meshes from day 0, and thereby become more data-driven than the incumbents in their markets.

2. Data Meshes don’t have to be “completely decentralized”.

The thing is, a data mesh consists of two sides: the technical side and the people, processes & organizational side. Changing people & processes is actually much more expensive than making technical changes. So switching later on from a centralized data culture to a decentralized one is indeed a major shift.

However, startups have the unique chance to start out with a decentralized data culture right away. You do that by putting the responsibility for data into the hands of the people using it from the start. You do that by putting an analyst right into marketing, sales & product from the beginning. You do that by giving ownership to these people, and not to a central data engineer.

I have an article on a basic blueprint outlining that in more detail somewhere in my archives, but I truly believe that size simply doesn’t matter for decentralization to work.

Nobody Cares About Your Data Pipeline

What: Dagster, the data orchestrator that focuses on being “data-tool” agnostic while speeding up a data developer’s workflow, introduced the concept of data assets. The idea behind it can be summarized by two quotes:

“Orchestration to a data engineer is an implementation detail to making a data asset.”

“Nobody cares about your data pipeline.”

My Perspective: I love the second quote. I am managing a data product, and I do remember how hard it was to come up with a “business case to implement a new data orchestrator”, because, well, people don’t care about orchestration. They care about their data. The concept integrates nicely into Dagster and puts the focus where it belongs. I like that approach.
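To make the “asset first” idea a bit more tangible, here is a minimal sketch in plain Python. It does not use Dagster’s actual API; the `asset` decorator, the `ASSETS` registry, and the `materialize` function are all hypothetical names made up for illustration. The point is only that you declare the data you want, and the “pipeline” (the order of execution) falls out of the dependencies.

```python
import inspect
from typing import Any, Callable, Dict, Optional

# Hypothetical registry (not Dagster's API): asset name -> function that computes it.
ASSETS: Dict[str, Callable[..., Any]] = {}


def asset(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function as a named data asset."""
    ASSETS[fn.__name__] = fn
    return fn


def materialize(name: str, cache: Optional[Dict[str, Any]] = None) -> Any:
    """Compute an asset, computing its upstream assets first.

    Upstream assets are inferred from the function's parameter names,
    so nobody has to write down the pipeline explicitly.
    """
    cache = {} if cache is None else cache
    if name not in cache:
        fn = ASSETS[name]
        upstream = inspect.signature(fn).parameters
        inputs = {dep: materialize(dep, cache) for dep in upstream}
        cache[name] = fn(**inputs)
    return cache[name]


@asset
def raw_orders():
    # Made-up sample data for illustration.
    return [
        {"customer": "a", "amount": 10},
        {"customer": "b", "amount": 5},
        {"customer": "a", "amount": 7},
    ]


@asset
def revenue_per_customer(raw_orders):
    totals = {}
    for order in raw_orders:
        totals[order["customer"]] = totals.get(order["customer"], 0) + order["amount"]
    return totals


result = materialize("revenue_per_customer")
```

Here the thing people ask for is `revenue_per_customer`. That `raw_orders` has to be computed first is an implementation detail, which is exactly the spirit of the first quote.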

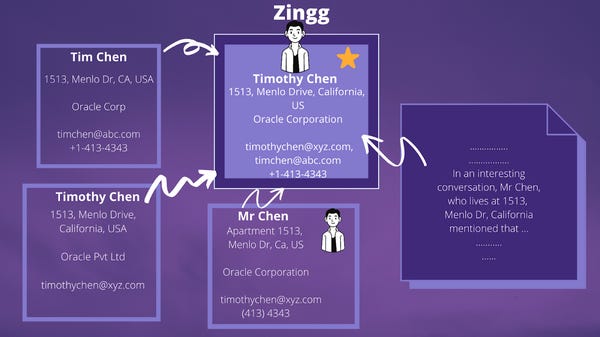

Picture courtesy of Zingg.

GitHub - zinggAI/zingg: Scalable data mastering, deduplication and entity resolution.

What: Zingg is a tool for “entity resolution”. It basically provides you with the tools to train an ML system on your data, even small data, to resolve duplicate entities into one.

There’s also an in-depth introduction with Sonal Goyal at the DataTalksClub.
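For readers new to the term, here is a toy sketch of what “resolving duplicate entities” means. This is not Zingg’s API and not how Zingg works internally: Zingg trains a model on your data, while this sketch swaps in a plain string-similarity threshold from Python’s standard library. The function names, the sample records, and the `0.85` threshold are assumptions chosen for illustration.

```python
from difflib import SequenceMatcher
from typing import List


def normalize(record: str) -> str:
    """Lowercase, drop simple punctuation, and collapse whitespace."""
    cleaned = record.lower().replace(",", " ").replace(".", " ")
    return " ".join(cleaned.split())


def similarity(a: str, b: str) -> float:
    """String similarity between 0 and 1 on the normalized records."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def resolve(records: List[str], threshold: float = 0.85) -> List[List[str]]:
    """Greedily group records that look like the same entity.

    Each record joins the first cluster whose first member is similar
    enough; otherwise it starts a new cluster.
    """
    clusters: List[List[str]] = []
    for record in records:
        for cluster in clusters:
            if similarity(record, cluster[0]) >= threshold:
                cluster.append(record)
                break
        else:
            clusters.append([record])
    return clusters


# Made-up sample records for illustration.
customers = ["Acme Corp.", "acme corp", "ACME Corp", "Globex Inc."]
clusters = resolve(customers)
```

A fixed threshold like this breaks down quickly on real data (typos, abbreviations, swapped fields), which is the gap a trained approach is meant to fill.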

My Perspective: I really like this kind of machine learning app, especially if we spin the idea a bit further. Besides self-training, which is valuable in some instances, Zingg could leverage large amounts of data across different customers and help me do the duplicate matching without me training anything.

It could leverage distributions, different sets of markers, and the like to provide extremely fast entity resolution without any training, just by providing sample profiles. Of course, sharing personal data across customers will be an issue to deal with here.

But the cool thing about an application that gets better as it gets more data is that it leverages true data network effects, as defined by NFX.

I am looking forward to Zingg’s journey.

🎁 Notes from the ThDPTh community

I am always stunned by how many amazing data leaders, VCs, and data companies read this newsletter. Here I share some of the readers’ recent noteworthy pieces that were shared with me:

Nothing here this week.

🎄 Thanks => Feedback!

Thanks for reading this far! I’d also love it if you shared this newsletter with people whom you think might be interested in it.

Data will power every piece of our existence in the near future. I collect “Data Points” to help understand & shape this future.

If you want to support this, please share it on Twitter, LinkedIn, or Facebook.

P.S.: I share things that matter, not the most recent ones. I share books, research papers, and tools. I try to provide a simple way of understanding all these things. I tend to be opinionated. You can always hit the unsubscribe button!

Data; Business Intelligence; Machine Learning, Artificial Intelligence; Everything about what powers our future.
