You Built a Feature. OpenAI Shipped It Tuesday.

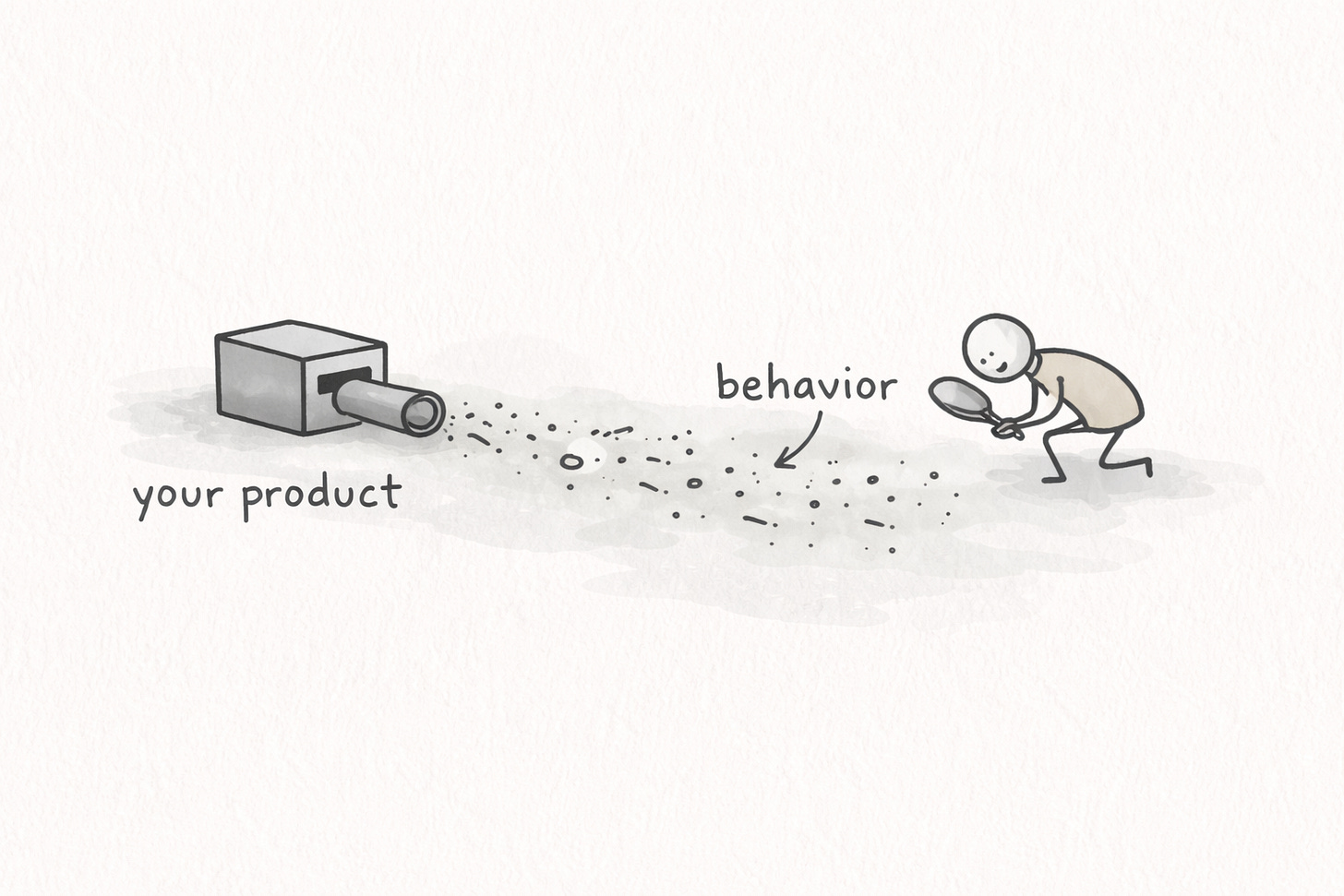

Models and RAG pipelines commoditize. Behavioral data compounds.

Marcus is the Head of AI at a Series B startup. His team spent two months building a RAG system from scraped documentation. Last week, OpenAI released a model that does the same thing out of the box. If you ask him what’s actually defensible about his product now, he’ll stare at the wall in the same Zoom room where he just demo’d it to the board.

I see teams do this every week.

If your advantage is “we built a thing around a model,” you don’t have a moat. Your moat is behavioral data only you can observe. If you don’t have that, you’re toast.

Everything that feels defensible in AI turns into a checkbox fast—fine-tuning, retrieval tricks, prompt scaffolding. Your wrapper gets commoditized. Congrats, you built a feature flag for someone else’s roadmap.

Your behavioral data, however, doesn’t commoditize. It compounds.

If you only have 5 minutes: here are the key points

Models aren’t moats: Building around a model is not a sustainable competitive advantage—models get commoditized quickly.

Your true moat is behavioral data: Specifically, the behavioral signals that emerge only inside your product. These can’t be scraped or replicated.

Focus on three data streams:

Abandonments: Reveal what jobs users gave up on—not just where they dropped off.

Retries: Surface where your activation flow breaks or where second chances reveal friction.

Workarounds: Point to unmet needs users are solving manually, which are high-signal feature requests.

Mining behavioral data is manual but crucial: It requires reaching out to real users, watching them use your product, and asking uncomfortable but specific questions.

Next step? Send tailored emails to churned users, returning users, and power users to uncover real insights that compound into defensible advantages.

The data moat that compounds: behavioral exhaust

What doesn’t get commoditized? Data that only exists inside your product. Behavioral patterns that emerge only when your specific users interact with your specific features.

I’ve watched companies raise entire rounds on this. Not because their model was better—everyone’s model is “better” for about six months—but because they’d accumulated behavioral signal no competitor could replicate. The gap between them and a new entrant wasn’t code. It was thousands of micro-observations about how real users actually behave.

Here’s what I’d look for first. Three specific data streams create this compounding advantage: abandonments, retries, and workarounds. These signals aren’t in your dashboard at all. You have to go mine them, probably by hand.

But as the saying goes, if your hands aren’t dirty, you’re probably already inside the grave.

How to mine it

Abandonments reveal the job your product didn’t complete. Abandonment doesn’t mean “stopped at this well-defined click path.” It means the person abandoned what they wanted to do. A user might complete every click in your flow and still abandon their goal.

Yoodli started as an AI speech coach. Varun Puri and Esha Joshi built features for interview prep, presentation practice, and general speaking improvement. Users came. Most evaporated.

Puri and Joshi could have looked at their dashboards and seen standard metrics. Drop-off at step 3. Churn after week 2. The kind of data that tells you something is wrong but not what.

Finding real abandonments is extremely hard. Mining this requires surveys sent to churned users, discovery calls with people who tried once and never came back, uncomfortable conversations where you hear things you don’t want to hear.

What Yoodli discovered: users who came for public speaking practice often abandoned the general speech coaching entirely. They wanted to practice specific conversations, not become better speakers in the abstract.

So they pivoted. Yoodli is now “Yoodli AI Roleplays”—same founders, same technology, focused on what users actually came for. Google uses it to certify 15,000 sales reps. Databricks and Snowflake use it for onboarding.

The data that drove this decision? It couldn’t be bought. It couldn’t be scraped. It existed only in the behavioral patterns of Yoodli’s own users—and extracting it required talking to actual humans, not querying a database.

If you’re thinking “we track churn already”—nope. Different thing. Churn tells you who left. Abandonments tell you what job they gave up on.

Retries show where activation is actually breaking. Retries don’t always look like retries. Sometimes a retry is a user trying your product multiple times across different sessions before it finally clicks. Sometimes it’s the same person downloading the app, using it once, abandoning it, and coming back three weeks later.
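Analytics can’t tell you why someone came back, but it can tell you who to ask. Here is a minimal Python sketch, assuming you can export one row per session as `(user_id, started_at)`. The function name `find_returns` and the 14-day default gap are illustrative assumptions, not a standard; tune the gap to how often your product is normally used.

```python
from collections import defaultdict
from collections.abc import Iterable
from datetime import datetime, timedelta


def find_returns(
    sessions: Iterable[tuple[str, datetime]],
    min_gap: timedelta = timedelta(days=14),  # hypothetical threshold
) -> dict[str, list[tuple[datetime, datetime]]]:
    """Find users who went quiet for at least `min_gap` and then came back.

    sessions: (user_id, started_at) pairs, in any order.
    Returns {user_id: [(last_seen_before_gap, returned_at), ...]}.
    """
    starts = defaultdict(list)
    for user_id, started_at in sessions:
        starts[user_id].append(started_at)

    returns = {}
    for user_id, times in starts.items():
        times.sort()
        # Compare each session with the next one; a long silence followed
        # by another session is a cross-session retry.
        gaps = [
            (prev, nxt)
            for prev, nxt in zip(times, times[1:])
            if nxt - prev >= min_gap
        ]
        if gaps:
            returns[user_id] = gaps
    return returns
```

Every user it returns is a candidate for a personal email. The code finds the pattern; only the conversation explains it.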

Granola makes an AI note-taking app for meetings. But meetings only happen once. A user doesn’t “retry” the same meeting. So where’s the behavioral signal?

What does Granola do? They follow up. Not with automated drip campaigns—with personal emails. When the Granola team notices someone has tried the product twice but hasn’t stuck, someone actually reaches out. “Hey, noticed you gave us another shot. What happened the first time? What made you come back?”

I know this because they did it to me.

Every conversation reveals friction you’d never find otherwise. “The notes were good but I didn’t know how to share them with my team.” “It worked great on Zoom but I couldn’t figure out how to use it on phone calls.” “I loved it but my boss thought it was recording video, which freaked her out.”

Granola raised $40 million at a $250 million valuation. Not because they had better AI—the transcription market is crowded. They raised because they understood their users’ behavior in ways competitors couldn’t access.

Workarounds are feature requests with proof. When I was building a data platform for 700 people at Unite, I noticed a strange pattern: certain dashboards got used constantly, but only briefly. People would open them, glance at something, and close them within seconds.

That’s weird behavior. Dashboards are supposed to be analyzed, not glanced at.

So I asked users directly. “Show me what you do with this data.” Not “describe your workflow”—show me.

When I asked Jana from the procurement team to show me, she shared her screen: open dashboard, export to CSV, close dashboard, open Excel, manipulate data, paste into a completely different system.

The dashboard wasn’t the product. The dashboard was a waypoint to a workaround.

The workaround IS the feature request. Except it comes with proof of demand—someone already built it themselves.

When I discovered the export-to-other-system pattern, I immediately evaluated integrations with those systems. Built them. They became the most successful parts of the data platform.

I would’ve never found this in analytics. There’s no event for “user left our product and did something useful elsewhere.” You only find workarounds by watching.
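Watching is the only way to see the workaround itself, but logs can tell you where to start watching. Below is a rough Python sketch of the glance-and-close pattern from the dashboard story, assuming you log each dashboard view with its dwell time in seconds. `glance_dashboards` and both thresholds are hypothetical; set them from your own baseline.

```python
from collections import defaultdict
from collections.abc import Iterable
from statistics import median


def glance_dashboards(
    views: Iterable[tuple[str, float]],
    min_views: int = 50,             # hypothetical: "used constantly"
    max_median_seconds: float = 15,  # hypothetical: "closed within seconds"
) -> list[tuple[str, int, float]]:
    """Flag dashboards that are opened often but closed within seconds.

    views: (dashboard_id, dwell_seconds) for every time a dashboard was opened.
    Returns (dashboard_id, view_count, median_dwell) rows, most-viewed first.
    """
    dwell = defaultdict(list)
    for dashboard_id, seconds in views:
        dwell[dashboard_id].append(seconds)

    flagged = []
    for dashboard_id, times in dwell.items():
        # Median rather than mean, so a few long sessions don't hide the pattern.
        typical = median(times)
        if len(times) >= min_views and typical <= max_median_seconds:
            flagged.append((dashboard_id, len(times), typical))

    return sorted(flagged, key=lambda row: row[1], reverse=True)
```

A flagged dashboard is a reason to book a screen share, not a conclusion.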

You’re up now

Next Monday, I want you to email a lot of people.

Not “send a survey.” Not “add a question to onboarding.” Email humans.

Pick 15 people total:

5 churned last month

5 who tried twice but aren’t active

5 power users
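If your product logs activity, you can pull the three lists instead of guessing. Here is a minimal Python sketch, assuming a hypothetical export of the days each user was active. It reads “churned last month” as last seen 30 to 60 days ago, “tried twice” as exactly two active days with nothing in the last 30, and “power users” as the most active days in the last 30. All three definitions are assumptions to adjust.

```python
from datetime import date, timedelta


def pick_outreach(
    activity: dict[str, list[date]],
    today: date,
    n: int = 5,
    window_days: int = 30,  # hypothetical length of "a month"
) -> dict[str, list[str]]:
    """Split users into the three outreach cohorts, up to n each.

    activity: {user_id: [date, ...]}, the days each user was active.
    A user lands in at most one cohort; checks run in the order below.
    """
    active_cutoff = today - timedelta(days=window_days)     # active "this month"
    churn_cutoff = today - timedelta(days=2 * window_days)  # left "last month"
    churned, tried_twice, power = [], [], []

    for user_id, days in activity.items():
        distinct = sorted(set(days))
        if not distinct:
            continue
        last_seen = distinct[-1]
        if last_seen >= active_cutoff:
            # Still around: score by distinct active days inside the window.
            recent = sum(1 for d in distinct if d >= active_cutoff)
            power.append((recent, user_id))
        elif len(distinct) == 2:
            # Showed up on exactly two days, then went quiet.
            tried_twice.append((last_seen, user_id))
        elif last_seen >= churn_cutoff:
            # Last seen 30 to 60 days ago: churned "last month".
            churned.append((last_seen, user_id))

    # Most recent leavers first; busiest power users first (ties by user_id).
    churned.sort(key=lambda row: row[0], reverse=True)
    tried_twice.sort(key=lambda row: row[0], reverse=True)
    power.sort(key=lambda row: (-row[0], row[1]))
    return {
        "churned": [u for _, u in churned[:n]],
        "tried_twice": [u for _, u in tried_twice[:n]],
        "power": [u for _, u in power[:n]],
    }
```

Recent leavers come first because they still remember what they were trying to do.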

Then send these.

1) Churned users (goal-abandonment)

Subject: Quick question

When you signed up, what were you trying to accomplish? Did you accomplish it before you left?

One sentence is perfect.

2) “Tried twice” users (retry signal)

Subject: What changed?

I noticed you gave us another shot. What went wrong the first time—and what made you come back?

If you reply with 2–3 bullets, I’ll owe you one.

3) Power users (workarounds)

Subject: Can you show me your workflow? (10 min)

Can you screenshare your full workflow end-to-end? Everything before and after our product—especially what you do right after you close it.

I’m trying to find the workaround you’ve built so we can turn it into the feature.

Rule: Don’t ask “why did you churn.” Don’t ask “any feedback.” Ask what they were trying to do, and what they did instead.

When replies come in, bucket them into:

Missing capability (they needed something you don’t have)

Trust/compliance fear (someone else blocked them)

Workflow mismatch (your product didn’t fit how they actually work)

The model you’re fine-tuning today will be obsolete in six months. The behavioral data only you can observe compounds forever.

Feel free to DM me if this isn’t working for you (though I’ll likely tell you that means you’re doing something completely wrong).