What's wrong with AI fluency

Execution Without Strategy Is Just Faster Failure.

I spent six months working AI into my product management tasks. PRDs got written faster, discovery went more smoothly, and I needed less beta testing. I could feel the impact everywhere.

But when I measured actual outcomes, nothing had changed. Same feature success rate, same growth metrics, same time-to-market for meaningful improvements.

You want to know why? Because I was optimizing execution speed for a fundamentally broken process.

So I took a step back. Inspired by Amazon's controllable inputs framework, I asked five questions before touching AI again:

What's the one key outcome I'm looking for? (Build features that grow the company)

What inputs can I control that measurably impact that outcome? (Feature ideas, customer insights, experiment design)

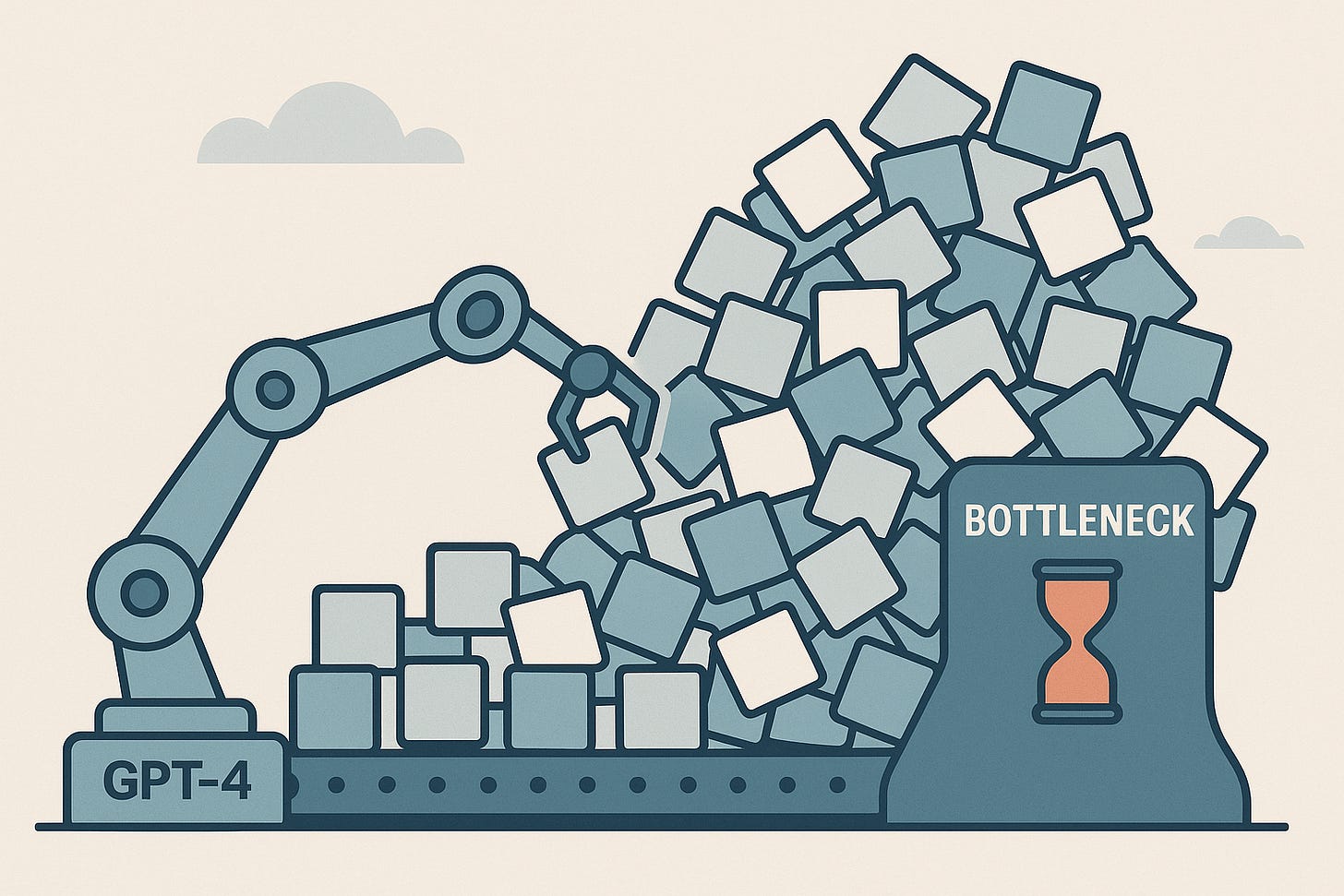

Where's the bottleneck? (Converting inputs to finished, evaluated ideas)

How do I solve this bottleneck with AI? (And then I did)

What changed? Speed picked up, and I started tackling more bottlenecks with focused AI decisions that actually produced better features faster.

This is why Zapier CEO Wade Foster's AI fluency framework misses the point entirely. Zapier is measuring how fast people can execute with AI, but execution speed isn't the bottleneck at most companies. Strategic clarity is.

Fast execution of bad ideas

The most dangerous hire is someone who scores "Transformative" on AI fluency but can't identify which problems actually drive business outcomes. They'll optimize everything beautifully—in completely the wrong direction.

I've seen this in hiring processes. The interview prompt is "Show me how you'd use AI to improve our marketing," and candidates who can chain multiple models and generate compelling campaigns get hired immediately.

But the real question should be: "Walk me through how you'd identify which marketing problems actually matter." Most "AI fluent" candidates can't answer this. They know how to execute, not what to execute.

Here's how a truly transformative AI user would answer: they wouldn't call out AI at all. They'd walk through their process (broader input gathering, faster evaluation cycles, better bottleneck identification), and AI would be woven throughout naturally. You wouldn't need to ask about their AI fluency because they'd demonstrate it without thinking about it.

The best AI users don't apologize for using AI or highlight when they're using it. It's just how they work.

Wade's framework creates dangerous false confidence. Leadership sees rapid AI adoption, impressive productivity metrics, and assumes they're winning. Meanwhile, they're just burning through resources faster while solving the wrong problems.

The real constraint

Instead of measuring AI fluency levels, measure strategic clarity first:

Can your team articulate the one problem you solve better than anyone?

Do you know which customer segment has urgent pain for your solution?

Can you identify the controllable inputs that actually drive your key outcomes?

Can you spot the current bottleneck preventing better results?

Only after answering these questions does AI fluency matter. You need strategic clarity before you need execution speed.

The companies succeeding with AI aren't the ones with the highest "fluency scores." They're the ones who figured out their strategic priorities first, then used AI to execute against those priorities faster.

What to hire for instead

When interviewing, I use indirect questions, just as I do in customer discovery calls: questions where the content of the answer doesn't matter, but the context does.

"Walk me through how you'd prioritize feature requests for our product." (Do they mention controllable inputs and measurable outcomes?)

"How would you approach improving our customer acquisition?" (Do they identify bottlenecks before jumping to tactics?)

"Describe a time you had to choose between two competing priorities." (Do they have a framework for what actually matters?)

The AI fluency questions come last, if at all. I want to know they can think strategically before I care whether they can execute with AI tools.

The bottom line

Wade's framework assumes your bottleneck is execution capability. But most companies don't fail at AI because their people can't use the tools effectively. They fail because they're using the tools to execute the wrong strategy faster.

You can be "transformative" at prompt engineering while being completely clueless about what problems actually matter. AI fluency without strategic focus is just expensive productivity theater.

The real competitive advantage isn't having people who can use AI tools better. It's having people who know what to use AI tools for.