Stop Hiring AI Engineers. Start Hiring Data Engineers.

Why Employee #3 Should Be a Data Engineer, Not Employee #30.

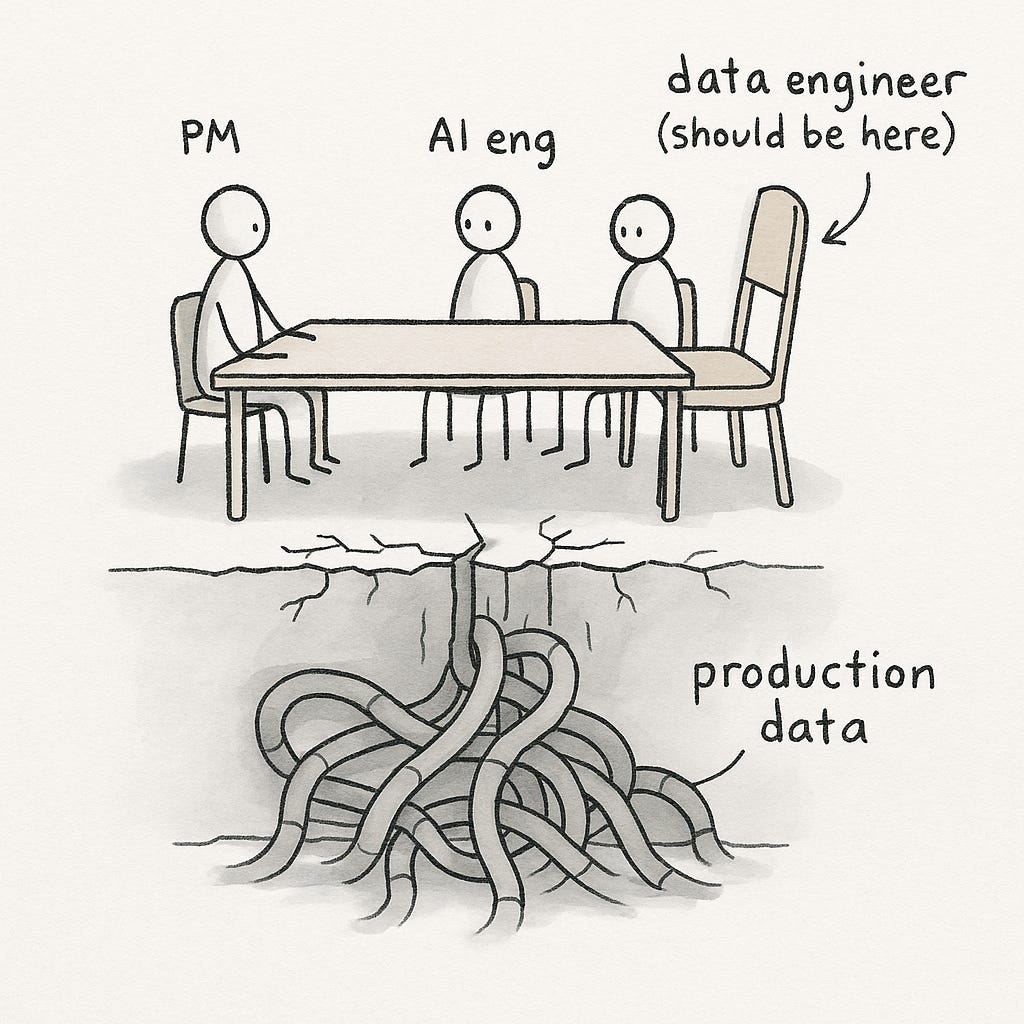

Data engineers should be at the core of your AI team. Not supporting the AI engineers. Not “helping with infrastructure.” Core team members, from day one, with equal authority. The AI industry got this backwards.

Your product breaks on production data that looks nothing like your test set. Deployments take down the system. Your AI team stares at throughput issues they can’t diagnose. Something is wrong with how the industry structures these teams. Your AI product processes terabytes of novel data every week: from users, from production, from contexts your test set never imagined. And you’re handling it without experts who know how to build systems that process data at scale without breaking.

This is the AI-First Trap: optimizing for what AI conferences celebrate while your product breaks on what they ignore.

If you only have 5 minutes: here are the key points

AI teams today lack production-grade data expertise. Most AI engineers are strong in model development and software deployment—but not in handling real-world, evolving, large-scale data.

Data engineers should be core team members, not support. They must be involved from day one with equal authority and decision-making power.

Vendors oversold abstraction. Platforms like Databricks and Snowflake convinced companies they could skip hiring data specialists. This created fragile AI systems prone to data-related failures.

The real failures aren’t in models—they’re in data pipelines. Systems break due to scale issues, invalid inputs, schema drift, and lack of monitoring—issues AI engineers are often untrained to handle.

The best AI teams think like data engineers. Teams that built data infrastructure early on tend to have stable, scalable AI products.

Hire for war stories, not tech stacks. Look for data engineers who’ve debugged real failures, not those focused only on frameworks or architecture diagrams.

Data platforms sold the tools, not the expertise you need

The data platform vendors created this disaster. They made billions convincing companies to skip the people who knew how to handle production data.

Here’s what they promised:

Databricks: “Lakehouse architecture eliminates data engineering complexity”

Snowflake: “Zero management data warehouse - no specialists required”

Azure: “Serverless data processing - focus on insights, not infrastructure”

Supabase: “Backend as a service - ship products, not infrastructure”

Every vendor pitched the same fantasy: our platform abstracts the complexity, you just focus on models. Data engineering became the bottleneck everyone learned to route around. Too slow, too bureaucratic, blocking shipping. AI teams waited weeks for infrastructure that should take days. Executives watched competitors ship faster and demanded speed. The vendors provided an answer: skip the specialists, use our platform, move fast.

The result: an entire generation of AI engineers—software engineers who learned machine learning—exceptionally skilled at building production services but completely unprepared for production data pipelines. They can deploy services at scale but have never built systems that handle data drift. They can handle 10K requests per second but have no framework for validating terabytes of constantly evolving input data. They know production software engineering but not production data engineering.

The platforms handle storage and compute brilliantly. But they don’t solve the unique challenges of production data: schema evolution, validation at terabyte scale, handling the infinite varieties of brokenness that real-world data exhibits. The vendors sold the tools. They didn’t sell the expertise.

Hiring followed. On LinkedIn, “AI Engineer” roles exploded: software engineers with ML skills. “Data Engineer” roles disappeared from startup job boards or reappeared as support roles: infrastructure team, reporting to engineering, helping when needed.

You kept hiring for model optimization and production software skills. Your product kept breaking on production data challenges. The AI-First Trap in action.

Here’s what actually breaks:

Failure 1: Your product goes down when data volume increases

Your model works on the test set. You deploy. Traffic increases 3x. The pipeline breaks. Your product goes down.

Your AI team can’t diagnose throughput issues in the data pipeline. They know how to scale services—add instances, load balance, optimize APIs. But they never learned to design data pipelines that scale on unknown input data. They optimized models on carefully curated datasets sized for laptop memory. When production data grows, the pipeline can’t handle the load.

They try adding compute. Increase batch size. Parallelize where they can. The problem persists because it’s not a compute problem—it’s data architecture. The schema doesn’t partition efficiently. The transformation logic doesn’t stream. The validation creates backpressure.
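To make “the transformation logic doesn’t stream” concrete, here is a minimal Python sketch. It assumes comma-separated records with two hypothetical fields, `user_id` and `amount`. Every stage is a generator, so memory is bounded by the batch size rather than by total data volume. The per-batch sum stands in for whatever transformation your pipeline actually runs, and the sketch assumes well-formed lines, leaving validation as a separate concern.

```python
from itertools import islice
from typing import Iterable, Iterator


def read_events(lines: Iterable[str]) -> Iterator[dict]:
    """Parse one record at a time instead of materializing the whole input."""
    for line in lines:
        user_id, amount = line.rstrip("\n").split(",")  # hypothetical two-field format
        yield {"user_id": user_id, "amount": float(amount)}


def batched(records: Iterable[dict], size: int) -> Iterator[list[dict]]:
    """Group a stream into fixed-size batches so memory use is bounded by `size`."""
    it = iter(records)
    while batch := list(islice(it, size)):
        yield batch


def total_per_batch(lines: Iterable[str], batch_size: int = 10_000) -> Iterator[float]:
    # Each stage pulls lazily from the previous one; nothing holds the full dataset.
    for batch in batched(read_events(lines), batch_size):
        yield sum(r["amount"] for r in batch)
```

You can pass an open file object directly, because iterating over a file yields one line at a time. Calling `f.readlines()` first would load everything into memory instead. That pattern works on a laptop-sized test set and fails once production volume grows.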

A data engineer sees this in minutes. They’ve built dozens of pipelines that process terabytes. They know which operations scale and which create bottlenecks. They design for 10x growth from day one because they’ve watched systems collapse under success.

Failure 2: Bad input data crashes your product

A null where the model expects a value. A string where it expects a number. An out-of-range value that breaks an assumption. Production incident.

Your AI team never built validation layers for production data. They know how to validate API inputs—type checking, bounds checking, standard software validation. But production data validation is different. User data evolves. Schemas drift. New edge cases appear constantly that no test suite anticipated.

Every edge case is a surprise. Every surprise is a production incident. They patch reactively—handle this null, catch that exception, wrap that transformation. The codebase becomes spaghetti.

A data engineer builds validation infrastructure from day one. Schema contracts that reject malformed data before it touches the model. Sanity checks at ingestion. Monitoring that alerts when distributions shift. They’ve seen every way data can break.
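Here is a minimal sketch of a schema contract enforced at ingestion, assuming records arrive as Python dicts. The field names, types, and bounds are hypothetical. The important design choice is that malformed records are quarantined with a reason instead of raising. One bad row then doesn’t become a production incident, and the quarantine becomes a signal you can monitor.

```python
from dataclasses import dataclass, field
from typing import Any, Optional

# Hypothetical contract: field name -> (expected type, nullable?)
CONTRACT: dict[str, tuple[type, bool]] = {
    "user_id": (str, False),
    "age": (int, False),
    "country": (str, True),
}
# Hypothetical sanity bounds, checked only after the type checks pass.
BOUNDS: dict[str, tuple[int, int]] = {"age": (0, 120)}


@dataclass
class ValidationResult:
    accepted: list[dict[str, Any]] = field(default_factory=list)
    quarantined: list[tuple[dict[str, Any], str]] = field(default_factory=list)


def check_record(record: dict[str, Any]) -> Optional[str]:
    """Return the first contract violation as a string, or None if the record is clean."""
    for name, (expected, nullable) in CONTRACT.items():
        value = record.get(name)
        if value is None:
            if not nullable:
                return f"{name}: missing or null"
            continue
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            return f"{name}: expected {expected.__name__}, got {type(value).__name__}"
    for name, (low, high) in BOUNDS.items():
        value = record.get(name)
        if value is not None and not low <= value <= high:
            return f"{name}: {value} outside [{low}, {high}]"
    return None


def validate_batch(records: list[dict[str, Any]]) -> ValidationResult:
    """Split a batch into records safe for the model and records quarantined with a reason."""
    result = ValidationResult()
    for record in records:
        reason = check_record(record)
        if reason is None:
            result.accepted.append(record)
        else:
            result.quarantined.append((record, reason))
    return result
```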

Failure 3: Every deployment is Russian roulette

Thirty percent of your deployments break something. You find out when customers complain.

Your AI team has no staging infrastructure for data systems. They know how to deploy services: blue-green, canary, rollback strategies. But they deploy models to production and pray the data behaves like the test set. It never does.

Data engineers build shadow mode, staged rollouts, automatic rollback on data regression. They’ve seen enough disasters to never deploy without it.
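Here is a minimal sketch of shadow mode plus a staged-rollout gate. It assumes both models are plain Python callables that return a numeric score. The stage percentages and thresholds are hypothetical placeholders. The candidate scores the same inputs as the live model, but its output is never served. The gate then either advances it to the next traffic stage or returns 0, which means automatic rollback.

```python
import math
from dataclasses import dataclass
from typing import Callable, Sequence

STAGES = [0, 1, 10, 50, 100]  # hypothetical percent-of-traffic stages


@dataclass
class ShadowReport:
    candidate_errors: int  # inputs on which the candidate raised
    disagreements: int     # inputs where candidate and live outputs differ (including NaN)
    total: int

    @property
    def error_rate(self) -> float:
        return self.candidate_errors / self.total if self.total else 0.0

    @property
    def disagreement_rate(self) -> float:
        return self.disagreements / self.total if self.total else 0.0


def run_shadow(inputs: Sequence[dict], live: Callable[[dict], float],
               candidate: Callable[[dict], float], abs_tol: float = 1e-6) -> ShadowReport:
    """Score every input with both models; only the live model's output is served."""
    errors = disagreements = 0
    for x in inputs:
        served = live(x)
        try:
            shadow = candidate(x)
        except Exception:  # a shadow crash is recorded, never surfaced to users
            errors += 1
            continue
        # math.isclose returns False for NaN, so NaN outputs count as disagreements.
        if not math.isclose(shadow, served, abs_tol=abs_tol):
            disagreements += 1
    return ShadowReport(errors, disagreements, len(inputs))


def next_stage(report: ShadowReport, current_pct: int,
               max_error_rate: float = 0.001, max_disagreement: float = 0.05) -> int:
    """Return the candidate's next traffic percentage, or 0 to roll back automatically."""
    if report.error_rate > max_error_rate or report.disagreement_rate > max_disagreement:
        return 0
    later = [s for s in STAGES if s > current_pct]
    return later[0] if later else STAGES[-1]
```

In practice, the inputs would be a sample of real production traffic rather than a replayed list. The point of shadow mode is to see data your test set never contained.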

The infrastructure-first trap: When data engineers become the bottleneck

Not all data engineers think production-first. Some will want to spend 6 months on architecture diagrams before shipping anything to customers. That’s the Infrastructure-First Trap: same disease as the AI-First Trap, different job title.

This is why executives learned to route around data engineers in the first place.

The production-first data engineer operates differently. They ship to production on week one with three critical pieces: basic validation, monitoring that alerts on data anomalies, and staged rollout infrastructure. Not perfect. Just enough to catch problems before customers see them. Then they add infrastructure as they learn what actually breaks in production.
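A first version of the “monitoring that alerts on data anomalies” piece can be very small. This hypothetical sketch tracks one field’s per-batch null rate and flags a batch whose rate sits more than `threshold` standard deviations from recent history. The window size, the threshold, and the 0.01 floor on the spread are assumptions to tune, not recommendations.

```python
import statistics
from collections import deque
from typing import Optional


class NullRateMonitor:
    """Hypothetical monitor: alert when a batch's null rate for one field departs from history."""

    def __init__(self, field: str, window: int = 30, threshold: float = 3.0,
                 min_history: int = 5) -> None:
        self.field = field
        self.history: deque = deque(maxlen=window)  # last `window` batch-level null rates
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, batch: list[dict]) -> Optional[str]:
        """Record this batch's null rate; return an alert message if it looks anomalous."""
        if not batch:
            return f"{self.field}: empty batch"
        rate = sum(1 for r in batch if r.get(self.field) is None) / len(batch)
        alert = None
        if len(self.history) >= self.min_history:
            mean = statistics.mean(self.history)
            # Floor the spread so a perfectly stable history doesn't make any change "infinite".
            spread = max(statistics.pstdev(self.history), 0.01)
            z = (rate - mean) / spread
            if abs(z) > self.threshold:
                alert = f"{self.field}: null rate {rate:.1%} vs baseline {mean:.1%} (z={z:.1f})"
        self.history.append(rate)
        return alert
```

Anomalous batches are still added to the history, so a persistent shift stops alerting once it becomes the new baseline. Whether that is desirable is a judgment call. Excluding flagged batches from the history is the stricter alternative.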

Infrastructure-first data engineers want to build for imagined problems. Production-first data engineers build for problems they’ve actually seen destroy systems. You want the latter.

I thought Unite’s ML engineers were normal

You know what’s funny? When I first arrived at Unite, I thought the ML team was like every other ML team I’d seen. Five engineers building production ML systems for thousands of customers, interfacing with lots of other teams. They shipped constantly. Systems just ran. No weekly production incidents. No emergency debugging sessions. Everything nicely integrated into production-grade infrastructure.

Only later did I realize this ISN’T normal.

Why? Their upbringing was different.

They joined when there wasn’t enough ML work. So they spent their first year making production ETL work for 700 people—handling real data failures, debugging data pipelines at 3am, learning what breaks data systems under load. Messy data from dozens of sources. Learning to validate systematically instead of reactively. Learning to deploy data pipelines without gambling.

By the time they moved to ML work (a field in which some of them held PhDs!), they thought like data engineers who happened to build models. They designed for data scale from day one. They built validation layers before the first deployment. They set up staging and monitoring infrastructure for data pipelines. They treated data quality as foundational, not optional.

The AI industry would call their first year wasted—no papers, no models, just boring data infrastructure. That “wasted” year is why their products never broke while everyone else’s did. The industry measures the wrong thing.

Hire data engineers as equals with veto power

Your product breaks every week. Stop hiring more AI engineers.

You know where production-first data engineers come from? Production. They have the battle scars to prove it.

Put them at the core from day one. Not as support. As equals designing the system alongside AI engineers. They own data architecture, validation infrastructure, deployment pipelines, monitoring. They’re in every design discussion. They have veto power over approaches that won’t scale with production data.

Your interview should test for war stories, not theory:

Question 1: “Walk me through a production data pipeline failure. What broke? How did you find it? What did you build to prevent it happening again?”

Question 2: “I’m about to deploy a model that processes 100K events per second. Our validation currently runs synchronously. What breaks first and what’s your fix?”

Question 3: “Describe a time you prevented a production failure before it happened.”

Red Flags:

Wants to spend a month on architecture diagrams before shipping anything

Resume emphasizes technologies and frameworks over production systems

Can’t describe specific production failures they prevented

Asks about your tech stack before asking about your data volume

Green Flags:

Tells you about debugging pipeline failures at 3am with specific details about what broke and why

Describes data pipeline failures in terms of customer impact

Asks about your production data volume and growth rate first

Can explain schema evolution strategy for your specific use case
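To show what a concrete schema evolution strategy can look like, here is a minimal sketch of one deliberately conservative, additive-only policy. A producer would run this check, for example in CI, before shipping a new schema version. The schema representation (field name mapped to a type name and a nullable flag) and the rules are illustrative assumptions, not a standard. Existing fields keep their name and type, fields may not become nullable, and new fields must be nullable.

```python
# Hypothetical schema representation: field name -> (type name, nullable?)
Schema = dict[str, tuple[str, bool]]


def breaking_changes(old: Schema, new: Schema) -> list[str]:
    """List the changes in `new` that would break consumers built against `old`."""
    problems = []
    for name, (old_type, old_nullable) in old.items():
        if name not in new:
            problems.append(f"removed field '{name}'")
            continue
        new_type, new_nullable = new[name]
        if new_type != old_type:
            problems.append(f"'{name}' changed type {old_type} -> {new_type}")
        # Consumers may assume a value is always present, so loosening to nullable breaks them.
        if new_nullable and not old_nullable:
            problems.append(f"'{name}' became nullable")
    for name, (_, nullable) in new.items():
        # Old producers never send new fields, so new fields must tolerate absence.
        if name not in old and not nullable:
            problems.append(f"new field '{name}' must be nullable")
    return problems
```

An empty list means the new version is safe to ship under this policy. Anything else is a conversation to have before deployment, not during an incident.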

The data engineer should be employee 3-5, not employee 15. By the time you realize you need them, you’ve already accumulated technical debt that takes 6 months to unwind. Hire them when you’re building the foundation, not after it’s already broken.

Stop optimizing for benchmarks

The AI-First Trap is measuring success by model performance while customers leave due to data failures.

You can keep hiring for what the industry celebrates—model optimization, production software engineering, API design. Your product will keep breaking every week on data problems.

Or you can put data engineers at the core. Build infrastructure that handles production data at scale without breaking. Design validation that catches data problems systematically. Create deployment practices for data pipelines that don’t gamble on customer surprises.

The AI industry celebrates what gets you conference talks. Your product breaks on what they ignore. Stop hiring for benchmarks. Start hiring for production.

Data engineers at the core, from day one. Or keep wondering why your product breaks every week.
