Meta's 'open' AI fraud

Meta, Mistral, and OpenAI turned 'open' into a marketing shield.

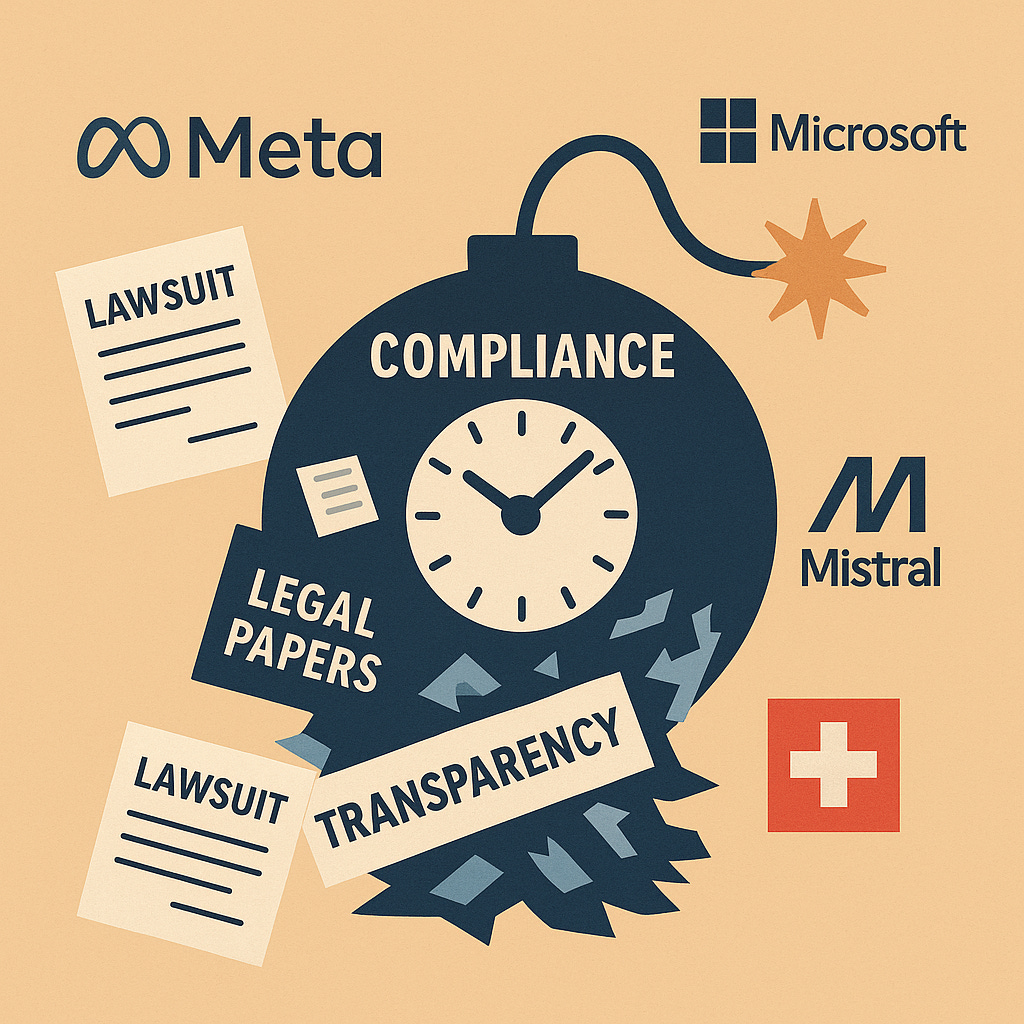

Thomson Reuters just won a major lawsuit against an AI company that couldn't prove where its training data came from. The New York Times is suing OpenAI over undisclosed content use. Getty Images, Reddit, and dozens of authors have filed copyright cases against AI companies for using their work without permission or documentation.

Meanwhile, Meta's entire training data disclosure for Llama: "Publicly available sources." That's it. No consent tracking, no source documentation, no way to verify what they scraped. Six hundred and fifty million downloads built on that level of "transparency."

Switzerland just released Apertus with complete source provenance, retroactive consent mechanisms, and full training documentation under Apache 2.0. The contrast makes the systematic fraud impossible to ignore.

This is the exact open-washing playbook I witnessed as Head of Marketing at Meltano, watching competitors like Airbyte use "open" for growth while keeping essential components locked down. Switzerland just became the Meltano of AI, and accidentally exposed every major AI company as a fraud.

The fraud exposed

Here's what the "open" AI industry actually delivers:

Meta's Llama (650+ million downloads): "Publicly available sources." That's their entire training data disclosure. No consent tracking, no source documentation, no way to verify what they scraped. Commercial restrictions for large companies. Failed the Open Source Initiative's October 2024 evaluation.

Mistral: "Pre-trained on data extracted from the open Web." Zero consent mechanisms, zero transparency about sources. Also failed OSI standards.

OpenAI, X/Twitter: Same pattern. They share the model weights while hiding everything that matters for compliance and reproduction.

The industry shares the easy part (final model weights) while hiding the parts that actually determine legal liability and vendor lock-in. This is identical to the playbook I fought in my time at Meltano: promote "open" credentials while keeping core differentiating components proprietary.

The test they all failed

The Open Source Initiative released its official Open Source AI Definition in October 2024. The results were brutal:

Passed: Pythia (EleutherAI), OLMo (AI2), CrystalCoder (LLM360), T5 (Google). Notice something? Not the household names.

Failed: Llama 2 (Meta), Grok (X/Twitter), Phi-2 (Microsoft), Mixtral (Mistral). Every major "open" model failed basic transparency requirements.

Critics consider even this standard too weak, because it allows companies to hide training data if it's "legally problematic." But it was still too much transparency for the industry's biggest players.

Switzerland didn't just meet these standards. They obliterated them by building transparency as a core design principle, not a compliance afterthought.

Why the fraud matters

I've seen what happens when companies get fooled by open-washing. At Meltano, we competed against tools claiming openness while locking users into vendor ecosystems through hidden dependencies. The AI version has much higher stakes.

Legal exposure is mounting. Thomson Reuters just won a major copyright case against an AI startup for undisclosed training data use. The New York Times, Getty Images, Reddit, and dozens of authors are suing AI companies over training data they can't audit or verify.

Compliance becomes impossible when you can't answer basic questions like "what data was this trained on?" The Swiss Bankers Association specifically praised Apertus because it can actually comply with strict data protection and banking secrecy rules.

Switzerland shows what happens when you design for transparency from day one instead of retrofitting compliance onto hidden processes.

What Switzerland actually built

Switzerland documented everything: complete source provenance down to individual websites, retroactive consent mechanisms that honor opt-out requests even after training, full training recipes and intermediate checkpoints under Apache 2.0 license, and built-in compliance with Swiss data protection laws and EU AI Act requirements.

You can literally reproduce their entire model from scratch. Every decision is auditable. Every source is documented. This is what genuine openness looks like, not marketing theater.

The smart money moves now

The lawsuits are piling up. Compliance requirements are tightening. Regulators are demanding audit trails that most "open" AI companies can't provide.

Switzerland didn't just build a better AI model. They exposed the industry's entire definition of "open" as systematic fraud. Meta, Mistral, Microsoft, and others have been selling vendor lock-in disguised as freedom.

Companies that understand this shift now will have a massive competitive advantage. Those that keep believing the marketing claims will find themselves legally exposed, out of compliance, and vendor-locked when the music stops.