☀️ From ETL to EL(T), Meltano & Airbyte, B/G Deployments for Data; ThDPTh #10 ☀️

Data will power every piece of our existence in the near future. I collect “Data Points” to help understand this future.

If you want to support this, please share it on Twitter, LinkedIn, or Facebook.

This week I got to think a lot about ELT, ETL, LET, TEL, L….

(1) 🎁 From ETL to EL (T)

Few things in data are as clear as the fact that ETL is an outdated practice. And that's exactly what it is: a practice. Every "ETL" tool can also do "EL (T)", but only some tools do nothing but the "T" and thereby enforce the EL (T) pattern. dbt is one example of a tool that very clearly enforces the EL (T) pattern.

So what is this EL (T)? For those who don't remember: for the past 20–30 years, data engineers bought a huge, fast computer with lots of memory, then fired up an ETL tool that loaded lots of raw data into memory, transformed it to make it pretty, and then dumped the final result, which was much smaller than the raw data, into a final database. Usually, all of this took one to a few hours.

Because the storage and computational power of the underlying databases have increased enormously, it is simply no longer necessary to do these computations before loading the data. Instead, EL (T) quickly dumps the raw data into the target database and then launches a T-only tool to do the transformation there. So we get the same result as before, plus more: for instance, fast access to the raw data and "ad hoc" views with low development time.
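To make the pattern concrete, here is a minimal sketch that uses Python's built-in sqlite3 as a stand-in for the warehouse. The table, the view, and the sample rows are hypothetical. What matters is the order of operations: the raw rows are loaded unchanged (EL), and the transformation is a view defined inside the database afterwards (T), so the raw table stays queryable right next to the derived one.

```python
import sqlite3

# Hypothetical raw records, as they might arrive from a source system.
RAW_ORDERS = [
    (1, "alice", 1200, "2021-01-03"),
    (2, "bob", 450, "2021-01-03"),
    (3, "alice", 800, "2021-01-04"),
]


def extract_and_load(conn: sqlite3.Connection, rows) -> None:
    """E + L: copy the raw rows into the database without reshaping them."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_orders ("
        "id INTEGER PRIMARY KEY, customer TEXT, amount_cents INTEGER, order_date TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO raw_orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


def transform(conn: sqlite3.Connection) -> None:
    """T: derive a model inside the database, on top of the raw table."""
    conn.execute("DROP VIEW IF EXISTS revenue_per_customer")
    conn.execute(
        "CREATE VIEW revenue_per_customer AS "
        "SELECT customer, SUM(amount_cents) / 100.0 AS revenue "
        "FROM raw_orders GROUP BY customer"
    )


conn = sqlite3.connect(":memory:")
extract_and_load(conn, RAW_ORDERS)
transform(conn)
print(conn.execute("SELECT * FROM revenue_per_customer ORDER BY customer").fetchall())
# [('alice', 20.0), ('bob', 4.5)]
```

In a real setup, the T step is the part a tool like dbt takes over: it manages SQL models like this view, and the dependencies between them, inside the warehouse.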

So EL (T) is a great practice everyone should use!

Resources

The T-only tool dbt.

(2) 🔥 EL — Meltano & Airbyte

So you've decided to switch to EL (T)? Great. It's easy to find a good T tool, but on the EL side, you'll have to make some decisions. I like the idea of open source in this space because ingestion is about having lots and lots of connectors, a challenge that simply cannot be solved economically by closed-source solutions. Pick any of the paid tools and check its integration list; I bet at least one or two of your important sources are missing. If you want something usable, you'll end up with a shortlist of two:

Meltano, 2019+, started at GitLab

Airbyte, a very young project, but as far as I can tell already well on its way and closing in on feature parity with Meltano

To find out more about Meltano, watch this Nerd Herd episode, which also discusses the weak points of the underlying integrations. The connectors are built on Singer, a standard that isn't completely "cleaned up". If you were to clean it up, you'd end up with something very close to what the team at Airbyte has already done, which is why Airbyte is the second option you should check out.
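For readers who haven't looked at Singer yet: a Singer tap is a program that writes JSON messages (SCHEMA, RECORD, and STATE) to stdout, one per line, and a Singer target reads them from stdin and loads them. The sketch below hand-rolls that message format instead of using the singer-python library; the `users` stream, its data, and the `users_last_id` bookmark are hypothetical.

```python
import json
import sys
from typing import Dict, Iterator, List

# Hypothetical source rows, assumed to be ordered by id; a real tap would
# page through an API or database instead.
USERS: List[Dict] = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b@example.com"},
]

USERS_SCHEMA = {
    "type": "object",
    "properties": {"id": {"type": "integer"}, "email": {"type": "string"}},
}


def tap_users(state: Dict) -> Iterator[Dict]:
    """Yield Singer-style messages: one SCHEMA, then RECORDs, then a STATE bookmark."""
    last_id = state.get("users_last_id", 0)
    yield {"type": "SCHEMA", "stream": "users", "schema": USERS_SCHEMA, "key_properties": ["id"]}
    for user in USERS:
        if user["id"] > last_id:  # incremental sync: skip rows already seen in an earlier run
            yield {"type": "RECORD", "stream": "users", "record": user}
            last_id = user["id"]
    yield {"type": "STATE", "value": {"users_last_id": last_id}}


def emit(messages: Iterator[Dict], out=sys.stdout) -> None:
    """Write one JSON message per line, which is what a target reads from stdin."""
    for message in messages:
        out.write(json.dumps(message) + "\n")


# Resume from a previous bookmark: only the user with id 2 is emitted.
emit(tap_users({"users_last_id": 1}))
```

Because a tap only talks to stdout, any target that understands these messages can consume it; that composability is what both Meltano and Airbyte build on.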

To complete your workflow, you will obviously need:

a transformation tool like dbt.

an orchestrator, for instance, Apache Airflow.

something to visualize stuff on top of it, like Superset, Looker, or Redash

Resources

Data Nerd Herd with Douwe Maan on Meltano and Singer

Airbyte's personal take on the state of EL tools, which I mostly agree with.

Airbyte's specification, which is basically Singer wrapped in Docker and thus generalizable.

(3) 🚀 B/G Data Deployment

I'm sharing this article not for the fancy technical details in it (I've seen this kind of Hive table deployment before), but for its general points, both stated and implied, which are very important. The general point is: we should keep using deployment best practices in the data domain! And that means not only B/G deployments but:

1. Using blue/green deployments to eliminate downtime. The article describes it as "subsecond downtime", but with a caching solution in front, it could become zero downtime.

2. Using canary releases to roll out larger updates

3. Using CD (coupled with blue/green + canary) to push out data often! As highlighted in the article, deployment best practices are actually about increasing both quality and the speed and frequency of updates.
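Here is a minimal sketch of blue/green for a single dataset, again using sqlite3 as a stand-in for the warehouse. Two physical copies (the hypothetical tables `orders_blue` and `orders_green`) sit behind one view, `orders`, which is what consumers query and which acts as the switch. The new load goes into the idle copy, a hypothetical quality check runs on it, and only then is the view repointed inside a single transaction. For a small table, duplicating the data like this is cheap.

```python
import sqlite3
from contextlib import contextmanager
from typing import Iterator, List, Tuple


@contextmanager
def transaction(conn: sqlite3.Connection) -> Iterator[None]:
    """Explicit BEGIN/COMMIT that rolls back on any error."""
    conn.execute("BEGIN")
    try:
        yield
    except BaseException:
        conn.execute("ROLLBACK")
        raise
    else:
        conn.execute("COMMIT")


def deploy(conn: sqlite3.Connection, live: str, new_rows: List[Tuple[int, int]]) -> str:
    """Load new_rows into the idle copy, check it, then point `orders` at it.

    Returns the color that is live afterwards.
    """
    idle = "green" if live == "blue" else "blue"
    # 1. Refresh the idle copy while consumers keep reading the live one.
    with transaction(conn):
        conn.execute(f"DELETE FROM orders_{idle}")
        conn.executemany(f"INSERT INTO orders_{idle} VALUES (?, ?)", new_rows)
    # 2. Hypothetical quality gate: never switch to an empty dataset.
    (count,) = conn.execute(f"SELECT COUNT(*) FROM orders_{idle}").fetchone()
    if count == 0:
        raise ValueError(f"{idle} failed validation; {live} stays live")
    # 3. Flip the switch in one transaction: readers see either the old or the new view.
    with transaction(conn):
        conn.execute("DROP VIEW orders")
        conn.execute(f"CREATE VIEW orders AS SELECT * FROM orders_{idle}")
    return idle


# isolation_level=None: sqlite3 opens no implicit transactions; we BEGIN/COMMIT ourselves.
conn = sqlite3.connect(":memory:", isolation_level=None)
for color in ("blue", "green"):
    conn.execute(f"CREATE TABLE orders_{color} (id INTEGER PRIMARY KEY, amount INTEGER)")
conn.execute("CREATE VIEW orders AS SELECT * FROM orders_blue")  # blue starts as live

live = deploy(conn, "blue", [(1, 100), (2, 250)])
print(live, conn.execute("SELECT SUM(amount) FROM orders").fetchone())  # green (350,)
```

Rolling back is just another swap of the view, since the previous copy is still there, untouched.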

Using all of these methods, you will be able to increase the quality of your product by a lot. The only two things you have to watch are where you place your "deployment switch" and whether you actually want to duplicate "everything behind the switch". Because duplicating a whole data lake is probably not a good idea, you can use solutions like lakeFS instead. For the "deployment switch" you'll have to get a bit more innovative, but there's a lot of room to play around. You could use nginx, which can also proxy plain TCP connections, Presto as a wrapper, or simply wrap the connection strings to your data into a configuration, which you could then B/G route as you see fit.

I hope the article gets you thinking about the possibilities for your own data deployments.

Resources

HelloDev Engineering blog about blue and green deployments for data sets.

lakeFS, an example of a tool for versioning and branching data lakes.

Martin Fowler’s elegant description of B/G deployments.

🎄 Thanks & In other news

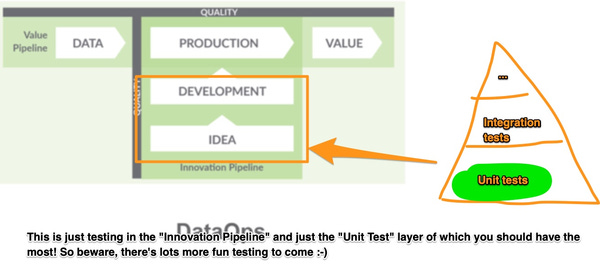

I created a WIP repository to display a typical test-driven workflow for dbt.

What the repository covers… Unit tests to develop fast!

I also just finished another Medium blog post on estimating data products. You already know I find data product management pretty important.

This is issue ten of my digest. I'm really pleased to have you with me. Please forward it to friends who you think would enjoy it.

P.S.: I share things that matter, not the most recent ones. I share books, research papers, and tools. I try to provide a simple way of understanding all these things. I tend to be opinionated, but you can always hit the unsubscribe button!

Data; Business Intelligence; Machine Learning; Artificial Intelligence; everything about what powers our future.


Powered by Revue