Data As Code - A new mental model for data; Thoughtful Friday #26

A mental model to unify a lot of quality and productivity improvements in data, and increase both quality & productivity of data pipelines at the same time.

I’m Sven and I’m writing this to help you (1) build excellent data companies, (2) build great data-heavy products, (3) become a high-performance data team & (4) build great things with open source.

Every other Friday, I share deeper rough thoughts on the data world.

Btw. My book “Data Mesh in Action” just hit Amazon.

Let’s dive in!

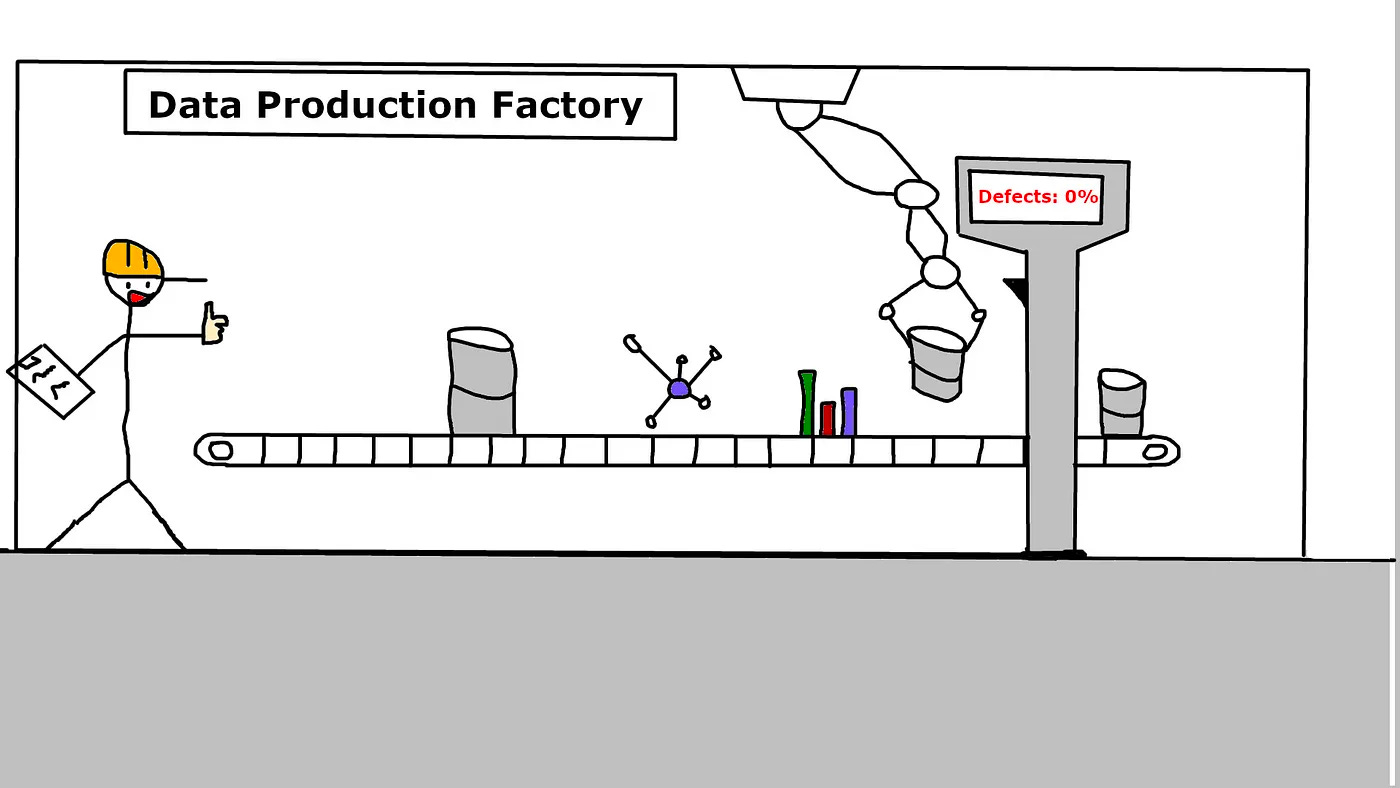

Data as Code is a mental model to improve the quality & productivity of data “pipelines” together.

Think about mentally exchanging “data pipelines” for “data delivery pipelines”.

If DaC resonates with you, then today is a great day to think about implementing it.

If DaC does not resonate with you, then you should ignore this!

🔮🔮🔮🔮🔮🔮🔮🔮🔮🔮🔮🔮🔮🔮🔮🔮🔮

I don’t hate Jazz, it’s an okayish music style. But I’m not a big fan either, just like most people. But the people who do like Jazz, love it! And sometimes, in a bar in Havana, in a chill-out lounge, Jazz simply is the right choice for the context, no discussion.

I’m introducing you to “Data as Code” (DaC) today. DaC is simply a mental model that might help you, or not. Just like Jazz, if it doesn’t resonate with you, then you can probably ignore it. People who like it see pretty big benefits. And for some applications, the DaC mindset simply is the mindset to use.

DaC means you stop thinking only about “data pipelines” and DAGs, and start thinking about data delivery pipelines.

But because we’re lacking a unifying toolset (there are plenty of isolated atomic tools), you can and should implement DaC in whatever way makes the most sense given your current toolstack. It is, after all, a mental model, supplemented with a set of practices that can be implemented in almost all tools.

If you take nothing else away from this article, consider the following question: Do you have any serious “data pipeline” that would benefit (a lot) from increased productivity and quality at the same time? That’s what DaC delivers, and far from everyone needs it.

DaC is “just like Infrastructure as Code”

Data as Code is just like Infrastructure as Code.

DaC is the approach of defining your data through a piece of source code that can be kept in version control and treated like any other software component. That gives you auditability and reproducibility, both of results and of the data itself. The data becomes subject to testing practices, stops breaking production systems, and can go through the full continuous delivery machinery.

This does NOT mean you keep “all your data” in version control! It means, if you do functional data engineering, you keep your partition key (which will be unique) in version control. It means you keep your lakeFS hashes in version control, your Apache Iceberg dates, etc. It is just like infrastructure as code, where you don’t actually “store machines in version control”: you keep some pointer, some abstraction in version control that allows reproducibility.
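To make the “pointer, not data” idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `DataPointer` schema, the `orders` dataset, and the choice of a SHA-256 fingerprint are hypothetical and do not reflect the actual lakeFS or Apache Iceberg metadata formats. The only point is that the thing you commit is a few lines of text, not the data.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class DataPointer:
    """A small, versionable reference to one dataset snapshot (hypothetical schema)."""

    dataset: str        # logical dataset name
    partition_key: str  # e.g. an immutable date partition
    content_hash: str   # fingerprint of the data the pointer refers to


def fingerprint(rows: list[dict]) -> str:
    """Hash the rows deterministically so equal data yields an equal pointer."""
    canonical = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def make_pointer(dataset: str, partition_key: str, rows: list[dict]) -> DataPointer:
    return DataPointer(dataset, partition_key, fingerprint(rows))


def to_manifest(pointer: DataPointer) -> str:
    """Serialize the pointer; this text is what would be committed to version control."""
    return json.dumps(asdict(pointer), indent=2, sort_keys=True)


# The data itself stays in the lake or warehouse; only the manifest is versioned.
rows = [{"order_id": 1, "amount": 10.0}, {"order_id": 2, "amount": 5.5}]
pointer = make_pointer("orders", "2022-06-01", rows)
manifest = to_manifest(pointer)
print(manifest)
```

Because the pointer is derived from the content, a diff of the manifest in a pull request tells you that the data changed, and checking out an old commit tells you exactly which snapshot a past result was built on.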

But that alone doesn’t make much sense. The key mindset shift involved in DaC, IMHO, is the one around “data pipelines”.

Exchange data pipelines for data delivery pipelines

Everyone talks about “data pipelines”. By that, most people mean a DAG, a graph that takes data, manipulates it, and puts it somewhere else. All of these steps are basically the “logical operation of your data application”.

If you were to look at a software application, this would correspond to a combination of the two process steps of “building our output” and “deploying it”.

And that, supposedly, is all there is to the flow of data.

But that’s not true. A “data delivery pipeline” is an automatic manifestation of all steps necessary to get data from its creation (logged in source control) into the hands of users.

The “build” and “deployment” parts are then just two small steps along a long pipeline that ensures data is delivered quickly and with high quality.

Your data delivery pipeline should also include testing, integration (& testing) into other components, and possibly deployments into sandbox environments. It could include A/B deployments. It could include everything that you decide is part of your workflow.

Every time your team has to fix something related to newly ingested data by hand, there usually is an automatic step you can add to the data delivery pipeline to stop related problems from occurring again.
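One way to picture “adding an automatic step” is a list of checks that runs against every newly ingested batch, where each past manual fix becomes one more function in the list. The sketch below is a hedged illustration only: the check names, the `order_id` and `amount` fields, and the `run_checks` helper are all hypothetical, and in practice the same idea could live in dbt tests, your orchestrator, or a CI job.

```python
from typing import Callable

Rows = list[dict]
Check = Callable[[Rows], list[str]]  # a check returns a list of problem descriptions


def no_missing_amount(rows: Rows) -> list[str]:
    """Added after someone had to patch null amounts by hand (hypothetical incident)."""
    return [f"row {i}: missing amount" for i, r in enumerate(rows) if r.get("amount") is None]


def no_duplicate_ids(rows: Rows) -> list[str]:
    """Added after duplicated orders inflated a dashboard (hypothetical incident)."""
    seen, problems = set(), []
    for i, r in enumerate(rows):
        if r["order_id"] in seen:
            problems.append(f"row {i}: duplicate order_id {r['order_id']}")
        seen.add(r["order_id"])
    return problems


def run_checks(rows: Rows, checks: list[Check]) -> list[str]:
    """Run every check and collect all problems; an empty list means the batch may proceed."""
    problems: list[str] = []
    for check in checks:
        problems.extend(check(rows))
    return problems


# The pipeline's test stage is just this list; it grows with every lesson learned.
CHECKS: list[Check] = [no_missing_amount, no_duplicate_ids]

good = [{"order_id": 1, "amount": 10.0}, {"order_id": 2, "amount": 5.5}]
bad = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": None},
    {"order_id": 1, "amount": 3.0},
]

print(run_checks(good, CHECKS))
print(run_checks(bad, CHECKS))
```

The design choice worth noting is that the checks return descriptions instead of raising on the first failure, so one run shows everything that is wrong with a batch.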

Exchange “or” for “and”

If you implement DaC, one of the first things you probably do is to implement a concrete “deploy step” in your data delivery pipelines.

This means a couple of things that sound super exciting to me: automatic rollback for data deployments and deployments to sandbox environments with testing before deploying to production.
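A rough sketch of what such a deploy step could look like, assuming that a “deployment” is nothing more than moving a pointer to an immutable snapshot (a metadata operation). The `Environment` class, the `release` function, the snapshot ids, and the health check are hypothetical names made up for this example; they are not the API of lakeFS, Iceberg, or any orchestrator.

```python
from typing import Callable, Optional


class Environment:
    """Tracks which snapshot an environment serves; deploying is a pointer move."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.history: list[str] = []  # snapshot ids, newest last

    @property
    def live(self) -> Optional[str]:
        return self.history[-1] if self.history else None

    def deploy(self, snapshot_id: str) -> None:
        self.history.append(snapshot_id)

    def rollback(self) -> None:
        """Point back at the previously served snapshot."""
        if len(self.history) < 2:
            raise RuntimeError(f"{self.name}: no earlier snapshot to roll back to")
        self.history.pop()


def release(
    snapshot_id: str,
    snapshots: dict[str, list[dict]],
    sandbox: Environment,
    production: Environment,
    is_healthy: Callable[[list[dict]], bool],
) -> bool:
    """Deploy to the sandbox, test there, and promote to production only on success."""
    sandbox.deploy(snapshot_id)
    if not is_healthy(snapshots[snapshot_id]):
        return False  # production is never touched by a bad snapshot
    production.deploy(snapshot_id)
    return True


# Hypothetical snapshots; "v2" contains a broken row.
snapshots = {
    "v1": [{"amount": 10.0}],
    "v2": [{"amount": None}],
    "v3": [{"amount": 12.0}],
}


def amounts_present(rows: list[dict]) -> bool:
    return all(r["amount"] is not None for r in rows)


sandbox, production = Environment("sandbox"), Environment("production")
ok_v1 = release("v1", snapshots, sandbox, production, amounts_present)
ok_v2 = release("v2", snapshots, sandbox, production, amounts_present)
ok_v3 = release("v3", snapshots, sandbox, production, amounts_present)

# Suppose a problem with v3 shows up later: rolling back is one pointer move.
production.rollback()
print(ok_v1, ok_v2, ok_v3, production.live)
```

In this sketch the broken snapshot only ever reaches the sandbox, and the later rollback needs no backup replay because the older snapshot was never deleted.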

But for a lot of people, these things don’t mean anything. At best an added burden. After all, you can easily test data in production, 2-3 hours of downtime on old data don’t mean too much for most BI systems, and replaying a backup doesn’t take too much time either.

So why use this different mental model? Because at its core it provides something else. Inspired by lean manufacturing, it solves a simple problem: previously, quality and productivity were trade-offs. Increase your speed and you make more mistakes, which reduces quality.

However, manufacturing has figured out that by using automation, essentially great processes, you are able to increase productivity by making fewer mistakes. And you do that by automating the hell out of testing and the most problematic steps.

That’s what DaC provides you: A mindset that will help you increase the final quality delivered to end users while also delivering more value over time to them.

If data doesn’t break your application that often, and you do not aim to increase the frequency at which you deliver data, then DaC will probably be a burden to you.

Why DaC now?

Apache Iceberg and time travel features of a lot of table formats are all over the place. And yet, the actual benefit this brings, having production systems that restore way faster, isn’t advertised nearly as much.

Tristan Handy recently wrote about “don’t build observability, build things that don’t break” (paraphrased).

Well, DaC is the way to do it.

Dagster introduced “software-defined assets”, making a big step towards realizing the “data delivery pipeline”.

lakeFS keeps maturing, and has been talking about CI/CD, A/B deployments and all of this fun stuff for years now.

The technologies are everywhere. All of them realize that data is different because it is huge. They all operate using metadata operations to make a DaC mindset easily implementable in many different toolsets.

At the same time, data is becoming exponentially more important to every company.

That all makes today the perfect day to see whether you can try out the DaC mindset on one of your important data components.

No need to make a huge shift, just pick your recommendation engine, your pricing algorithm, your most important dashboard, whatever it is that is the 80/20 in your data applications. Work through it and see whether you would like to fix problems with data faster, get more recent data into it, get more quality into its data.

Who’s already doing this?

I haven’t seen anyone doing DaC under the DaC name tag. If you are, please let me know. I would love to see it!

But there are a lot of movements under a large set of different tags that do this. So maybe you can consider this a good unifier, a wrapper around things like:

Running dbt inside a CI system. (“building the data”)

Doing CD4ML (a 1-1 application of DaC, just limited to machine learning).

Almost everything the lakeFS team is writing about.

Functional Data Engineering (is essentially versioning your data through partitioning).

and many more…

If that is you, maybe Data as Code helps you to put things into perspective and quickly enhance your workflows. Or not. Feel free to talk to me about it.

Final note about the tools: I personally see the benefits of using traditional software engineering tools like GitLab CI, GitHub Actions etc. to implement DaC. But there is no reason to not include any of this inside your data orchestrator. Or whatever tool you use. No reason to not utilize your data observability tools inside this chain to make all of this work.

It’s a mindset shift, not a tool.

How was it?

I find myself writing a lot more lately, so be sure to check out my articles in other places. Here’s what I churned out since the last newsletter edition:

How To Become The Next GitLab - 31 “Simple” Steps to Build A COSS Company

Top 8 Skills To Stand Out As Data Engineer - Learn these skills to stand out as a mature data engineer. Once you’ve mastered the basics of data engineering, this is how you advance and stand out.

Why You Should Occasionally Kill Your Data Stack - Three inspiring chaos experiments to help any data team make their stacks more resilient.

High-Performance Data Teams Don’t Care About Data Quality - High-performance data teams focus on the same four metrics high-performance software teams focus on. But they understand how these metrics behave differently for data teams.

Shameless plugs of things by me:

Check out Data Mesh in Action (co-author, book)

and Build a Small Dockerized Data Mesh (author, liveProject in Python).

You can also find me on Medium, with more unique content.

I truly believe that you can take a lot of shortcuts by reading pieces from people with real experience that are able to condense their wisdom into words.

And that’s what I’m collecting here, little pieces of wisdom from other smart people.

You’re welcome to email me with questions or to raise issues I should discuss. If you know a great topic, let me know about it.

If you feel like this might be worthwhile to someone else, go ahead and pass it along. Finding good reads is always a hard challenge, and they will appreciate it.

Until next week,

Sven